Recurrent Neural Networks (RNN) using TensorFlow

Generating Text using TensorFlow RNN

In the previous article, we covered linear regression, worked through examples, and finally trained a price-prediction model with it. If you haven't read it yet, here is the link ->

In this tutorial, we are going to cover:

- Introduction to RNN

- How does RNN work?

- Types of RNN

- Use case of RNN using TensorFlow

- Text Generation using RNN

- Applications of RNN

Introduction to RNN

Neural networks come in several types, such as the Convolutional Neural Network (CNN), the Artificial Neural Network (ANN), and the Recurrent Neural Network (RNN).

A Recurrent Neural Network is a type of neural network that contains loops, allowing information from earlier steps to persist within the network.

In short, a Recurrent Neural Network uses what it has seen earlier in a sequence to inform its predictions about upcoming events.

How does a Recurrent Neural Network work?

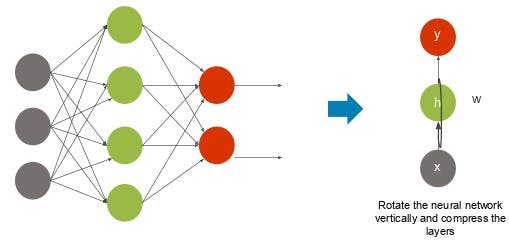

Recurrent Neural Networks can be thought of as a series of networks linked together. They often have a chain-like architecture, making them applicable for tasks such as speech recognition, language translation, etc.
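
The chain of linked networks can be sketched in a few lines of NumPy. This is a minimal illustration of one common formulation (a vanilla recurrence with a tanh activation), not the TensorFlow implementation; the function name `rnn_forward`, the sizes, and the random weights are all assumptions made for the example.

```python
import numpy as np

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a vanilla RNN over a sequence and return every hidden state."""
    hidden = np.zeros(W_hh.shape[0])      # initial hidden state
    states = []
    for x_t in inputs:                    # one step per sequence element
        # The same weights are reused at every step; only `hidden` changes,
        # which is how information from earlier steps is carried forward.
        hidden = np.tanh(W_xh @ x_t + W_hh @ hidden + b_h)
        states.append(hidden)
    return np.stack(states)

rng = np.random.default_rng(0)
seq_len, input_size, hidden_size = 5, 3, 4   # illustrative sizes
inputs = rng.normal(size=(seq_len, input_size))
W_xh = rng.normal(scale=0.5, size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b_h = np.zeros(hidden_size)

states = rnn_forward(inputs, W_xh, W_hh, b_h)
print(states.shape)  # (5, 4): one hidden state per time step
```

Each hidden state depends on the current input and on the previous hidden state, which is the "loop" in the network.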

An RNN can be designed to operate across sequences of vectors in the input, output, or both.

For example, a network with a sequenced input may take a sentence as input and output a positive or negative sentiment value.

Alternatively, a network with a sequenced output may take an image as input and produce a sentence as output.

Let's imagine training an RNN to spell the word "happy," given the letters "h, a, p, y." The RNN will be trained on four separate examples, each corresponding to the likelihood that a given letter follows the letters before it.

For example, the network will be trained to understand the probability that the letter "a" should follow in the context of "h." Similarly, the letter "p" should appear after the sequence "ha." Again, a probability will be calculated for the letter "p" following the sequence "hap," and finally for the letter "y" following "happ."

The process continues until a probability has been calculated for every letter in the intended sequence. As the network receives each input, it determines the probability of the next letter based on the preceding letter or sequence. Over time, the network's weights are updated so that it produces more accurate results.
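
The four training examples for "happy" can be listed explicitly. The snippet below is only an illustration of how the (context, next letter) pairs are formed; the variable names are chosen for this example.

```python
word = "happy"

# Each training example pairs the letters seen so far with the letter that follows.
examples = [(word[:i], word[i]) for i in range(1, len(word))]

for context, target in examples:
    print(f"after {context!r} predict {target!r}")
# after 'h' predict 'a'
# after 'ha' predict 'p'
# after 'hap' predict 'p'
# after 'happ' predict 'y'
```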

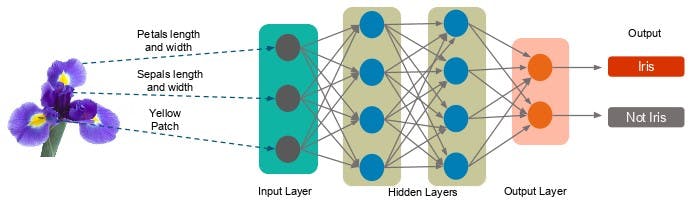

A typical RNN looks like the image shown below:

Types of RNN

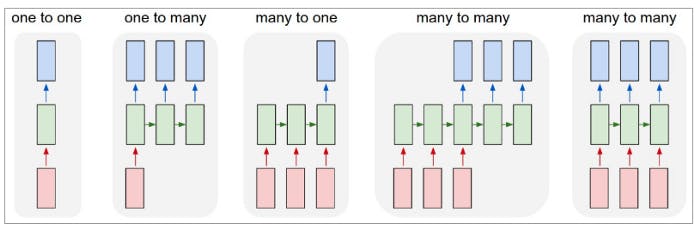

There are different types of RNNs, which vary based on the application:

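- One-to-one: Also known as a vanilla neural network. It maps a single input to a single output and is used for regular machine learning problems.

- One-to-many: There is one input and multiple outputs, for example an image as input and a sentence as output.

- Many-to-one: A sequence of inputs produces a single output. This kind of network is used to carry out sentiment analysis.

- Many-to-many: A sequence of inputs produces a sequence of outputs. It is generally used in machine translation.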
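
One way to see the difference between these types is to look at the shapes going in and coming out. The sketch below uses a toy NumPy recurrence; the helper `run_rnn`, the sizes, and the choice to feed zero vectors once the inputs run out (for the one-to-many case) are assumptions made for illustration, not how any particular library does it.

```python
import numpy as np

def run_rnn(inputs, steps, W_xh, W_hh):
    """Unroll a vanilla RNN for `steps` steps; steps without an input receive zeros."""
    hidden = np.zeros(W_hh.shape[0])
    states = []
    for t in range(steps):
        x_t = inputs[t] if t < len(inputs) else np.zeros(W_xh.shape[1])
        hidden = np.tanh(W_xh @ x_t + W_hh @ hidden)
        states.append(hidden)
    return np.stack(states)

rng = np.random.default_rng(1)
W_xh = rng.normal(size=(4, 3))
W_hh = rng.normal(size=(4, 4))
sequence = rng.normal(size=(6, 3))   # six time steps, three features each

one_to_one = run_rnn(sequence[:1], 1, W_xh, W_hh)[0]    # one input, one output
one_to_many = run_rnn(sequence[:1], 6, W_xh, W_hh)      # one input, six outputs
many_to_one = run_rnn(sequence, 6, W_xh, W_hh)[-1]      # six inputs, last state only
many_to_many = run_rnn(sequence, 6, W_xh, W_hh)         # six inputs, six outputs

print(one_to_one.shape, one_to_many.shape, many_to_one.shape, many_to_many.shape)
# (4,) (6, 4) (4,) (6, 4)
```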

Use Case Implementation of RNN

Problem statement: We have gathered data on milk production over several months. Using an RNN, we want to predict milk production per cow, in pounds, by treating the data as a time series.
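
Before an RNN can be trained on a series like this, the series is usually cut into fixed-length windows, each paired with the value that follows it. The sketch below shows that preparation step only. The production numbers are made up for illustration and the window length of 3 is an assumption.

```python
import numpy as np

# Made-up monthly milk production values (pounds per cow), for illustration only.
production = np.array([600, 580, 640, 660, 720, 700, 650, 610], dtype=float)
window = 3  # assumed number of past months used to predict the next one

# Each row of X holds `window` consecutive months; y holds the month that follows.
X = np.array([production[i:i + window] for i in range(len(production) - window)])
y = production[window:]

# Recurrent layers expect input shaped (samples, time steps, features).
X = X[..., np.newaxis]

print(X.shape, y.shape)  # (5, 3, 1) (5,)
```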

Text Generation using RNN

An RNN can be used to generate text in the style of a specific author. We will look at how to use a recurrent neural network to create new text in the style of Sir Arthur Conan Doyle using his book called “The Adventures of Sherlock Holmes” as our dataset.

We can get the data from the Project Gutenberg website. We just need to save it as a text (.txt) file and delete the Gutenberg header and footer embedded in the text.

Creating our dataset

As usual, we start by creating our dataset. To use our textual data with an RNN, we need to transform it into numeric values.

To get started, we load the data and create a mapping from character to integer and from integer to character:

    # Read the book and lowercase it to keep the vocabulary small
    with open('sherlock_homes.txt', 'r') as file:
        text = file.read().lower()
    print('text length', len(text))

    chars = sorted(list(set(text)))  # all unique characters
    print('total chars: ', len(chars))

    # Lookup tables: character -> integer and integer -> character
    char_indices = dict((c, i) for i, c in enumerate(chars))
    indices_char = dict((i, c) for i, c in enumerate(chars))
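
To see what the two mappings do, here is the same idea applied to a single word instead of the whole book. The `demo_` names and the sample string are placeholders chosen for this example.

```python
demo_text = "holmes"  # tiny stand-in for the book text

demo_chars = sorted(set(demo_text))
demo_char_indices = {c: i for i, c in enumerate(demo_chars)}
demo_indices_char = {i: c for i, c in enumerate(demo_chars)}

# Encode the text as integers, then decode it back to check the round trip.
encoded = [demo_char_indices[c] for c in demo_text]
decoded = "".join(demo_indices_char[i] for i in encoded)

print(demo_chars)  # ['e', 'h', 'l', 'm', 'o', 's']
print(encoded)     # [1, 4, 2, 3, 0, 5]
print(decoded)     # holmes
```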

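To build training examples, we split the text into overlapping subsequences of 40 characters, starting a new subsequence every 3 characters. The character that follows each subsequence is the target the model has to predict. Both inputs and targets are then one-hot encoded.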

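    import numpy as np

    maxlen = 40  # length of each input sequence
    step = 3     # offset between the starts of consecutive sequences
    sentences = []
    next_chars = []
    for i in range(0, len(text) - maxlen, step):
        sentences.append(text[i: i + maxlen])
        next_chars.append(text[i + maxlen])

    # One-hot encode inputs (x) and targets (y).
    # The built-in bool is used because np.bool is not available in every NumPy version.
    x = np.zeros((len(sentences), maxlen, len(chars)), dtype=bool)
    y = np.zeros((len(sentences), len(chars)), dtype=bool)
    for i, sentence in enumerate(sentences):
        for t, char in enumerate(sentence):
            x[i, t, char_indices[char]] = 1
        y[i, char_indices[next_chars[i]]] = 1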
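
The windowing is easier to follow on a short string. The sketch below repeats the same steps with a window of 10 instead of 40 so that the result stays readable. The sample sentence and the `demo_` names are assumptions for this example.

```python
import numpy as np

demo_text = "elementary, my dear watson"   # stand-in text
demo_maxlen, demo_step = 10, 3              # smaller than 40 to keep the output short

demo_chars = sorted(set(demo_text))
demo_char_indices = {c: i for i, c in enumerate(demo_chars)}

# Slide a window over the text and remember the character that follows it.
windows, targets = [], []
for i in range(0, len(demo_text) - demo_maxlen, demo_step):
    windows.append(demo_text[i: i + demo_maxlen])
    targets.append(demo_text[i + demo_maxlen])

# One-hot encode: exactly one True per (window, position).
x_demo = np.zeros((len(windows), demo_maxlen, len(demo_chars)), dtype=bool)
for i, window in enumerate(windows):
    for t, char in enumerate(window):
        x_demo[i, t, demo_char_indices[char]] = True

print(repr(windows[0]), "->", repr(targets[0]))  # 'elementary' -> ','
print(x_demo.shape)  # (number of windows, window length, number of unique characters)
```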

Training Preparation

Although creating an RNN sounds complex, the implementation is pretty easy using Keras. We will create a simple RNN with the following structure:

- LSTM layer: learns the sequence

- Dense (fully connected) layer: one output neuron for each unique character

- Softmax activation: transforms the outputs into probability values

The model is compiled with the RMSprop optimizer and the categorical cross-entropy loss function:

    from keras.models import Sequential
    from keras.layers import Dense, Activation
    from keras.layers import LSTM
    from keras.optimizers import RMSprop

    model = Sequential()
    model.add(LSTM(128, input_shape=(maxlen, len(chars))))
    model.add(Dense(len(chars)))
    model.add(Activation('softmax'))

    # Recent Keras releases name this argument learning_rate (formerly lr)
    optimizer = RMSprop(learning_rate=0.01)
    model.compile(loss='categorical_crossentropy', optimizer=optimizer)
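
To make the role of the softmax activation concrete, here is a small NumPy sketch of the calculation. It is a hand-written illustration, not the Keras implementation, and the three scores are hypothetical values standing in for the Dense layer's outputs.

```python
import numpy as np

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    shifted = scores - np.max(scores)   # subtract the max for numerical stability
    exp_scores = np.exp(shifted)
    return exp_scores / exp_scores.sum()

scores = np.array([2.0, 1.0, 0.1])      # hypothetical outputs for three characters
probs = softmax(scores)

print(probs.round(3))
print(probs.sum())
```

The largest score receives the largest probability, and the probabilities can be read as the model's belief about which character comes next.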

Helper functions

To see how the model improves during training, we will create two helper functions.

i. The first helper function samples an index from the model's output (a probability array). Its temperature parameter controls how much freedom the function has when creating text: low values make it favor the most likely characters, while higher values lead to more varied and more surprising choices.

    def sample(preds, temperature=1.0):
        # Helper function to sample an index from a probability array
        preds = np.asarray(preds).astype('float64')
        # Rescale the log-probabilities by the temperature, then renormalize
        preds = np.log(preds) / temperature
        exp_preds = np.exp(preds)
        preds = exp_preds / np.sum(exp_preds)
        # Draw one sample from the rescaled distribution and return its index
        probas = np.random.multinomial(1, preds, 1)
        return np.argmax(probas)
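
The effect of the temperature is easiest to see by applying the same rescaling to a small probability array without the random draw. In this sketch, `apply_temperature` is a name made up for the example and the three probabilities are hypothetical.

```python
import numpy as np

def apply_temperature(preds, temperature):
    """Rescale a probability array the same way sample() does before drawing."""
    scaled = np.log(np.asarray(preds, dtype="float64")) / temperature
    exp_scaled = np.exp(scaled)
    return exp_scaled / exp_scaled.sum()

preds = [0.6, 0.3, 0.1]   # hypothetical model output for three characters
for temperature in [0.2, 1.0, 1.2]:
    print(temperature, apply_temperature(preds, temperature).round(3))
```

A temperature below 1 pushes almost all of the probability onto the most likely character, a temperature of 1 leaves the distribution unchanged, and a temperature above 1 flattens it.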

ii. The second generates text at four different temperatures at the end of each epoch, so we can see how our model is doing.

    import random
    import sys
    from keras.callbacks import LambdaCallback

    def on_epoch_end(epoch, logs):
        # Function invoked at end of each epoch. Prints generated text.
        print()
        print('----- Generating text after Epoch: %d' % epoch)

        # Pick a random 40-character seed from the training text
        start_index = random.randint(0, len(text) - maxlen - 1)
        for diversity in [0.2, 0.5, 1.0, 1.2]:
            print('----- diversity:', diversity)

            generated = ''
            sentence = text[start_index: start_index + maxlen]
            generated += sentence
            print('----- Generating with seed: "' + sentence + '"')
            sys.stdout.write(generated)

            for i in range(400):
                # One-hot encode the current 40-character window
                x_pred = np.zeros((1, maxlen, len(chars)))
                for t, char in enumerate(sentence):
                    x_pred[0, t, char_indices[char]] = 1.

                preds = model.predict(x_pred, verbose=0)[0]
                next_index = sample(preds, diversity)
                next_char = indices_char[next_index]

                # Append the new character and slide the window forward by one
                generated += next_char
                sentence = sentence[1:] + next_char

                sys.stdout.write(next_char)
                sys.stdout.flush()
            print()

    print_callback = LambdaCallback(on_epoch_end=on_epoch_end)

We will also define two other callbacks. The first is called ModelCheckpoint. It saves our model at the end of every epoch in which the loss improves.

    from keras.callbacks import ModelCheckpoint

    filepath = "weights.hdf5"
    checkpoint = ModelCheckpoint(filepath, monitor='loss',
                                 verbose=1, save_best_only=True,
                                 mode='min')

The other callback reduces the learning rate whenever the loss stops improving.

    from keras.callbacks import ReduceLROnPlateau

    reduce_lr = ReduceLROnPlateau(monitor='loss', factor=0.2,
                                  patience=1, min_lr=0.001)

    callbacks = [print_callback, checkpoint, reduce_lr]
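
With these settings, the learning rate can only drop a limited number of times before it reaches its floor. The arithmetic below mimics the rule the callback applies (multiply by the factor, but never go below min_lr). It is a plain-Python illustration, and the assumption that the loss plateaus three times is made up for the example.

```python
learning_rate = 0.01            # starting value passed to RMSprop
factor, min_lr = 0.2, 0.001     # same values as in the ReduceLROnPlateau call

schedule = [learning_rate]
for _ in range(3):              # assume the loss plateaus three times
    learning_rate = max(learning_rate * factor, min_lr)
    schedule.append(learning_rate)

print(schedule)
```

The first reduction takes the rate from 0.01 to about 0.002. The second would take it to 0.0004, so it is clamped to the floor of 0.001, where it stays.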

Training a model

For training, we need to choose a batch size and the number of epochs. For the batch_size I chose 128, which is an arbitrary number, and we train for 5 epochs.

    model.fit(x, y, batch_size=128, epochs=5, callbacks=callbacks)

Training output:

Epoch 1/5

187271/187271 [==============================] - 225s 1ms/step - loss: 1.9731

----- Generating text after Epoch: 0

----- diversity: 0.2

----- Generating with seed: "lge on the right side of his top-hat to "

lge on the right side of his top-hat to he wise as the bore with the stor and string that i was a bile that i was a contion with the man with the hadd and the striet with the striet in the stries in the struttle

----- diversity: 0.5

----- Generating with seed: "lge on the right side of his top-hat to "

lge on the right side of his top-hat to he had putting the stratce, and that is street in the striet man would not the stepe which we

To generate text ourselves, we will create a function similar to on_epoch_end. It picks a random starting index, takes the next 40 characters from the text as a seed, and then uses them to make predictions.

    def generate_text(length, diversity):
        # Get random starting text
        start_index = random.randint(0, len(text) - maxlen - 1)
        generated = ''
        sentence = text[start_index: start_index + maxlen]
        generated += sentence
        for i in range(length):
            # One-hot encode the current window and predict the next character
            x_pred = np.zeros((1, maxlen, len(chars)))
            for t, char in enumerate(sentence):
                x_pred[0, t, char_indices[char]] = 1.

            preds = model.predict(x_pred, verbose=0)[0]
            next_index = sample(preds, diversity)
            next_char = indices_char[next_index]

            # Append the new character and slide the window forward by one
            generated += next_char
            sentence = sentence[1:] + next_char
        return generated

Now we can create text by just calling the generate_text function:

    print(generate_text(500, 0.2))

Generated text:

of something akin to fear had begun

to be a sount of his door and a man in the man of the compants and the commins of the compants of the street. i could he could he married him to be a man which i had a sound of the compant and a street in the compants of the companion, and the country of the little to come and the companion and looked at the street. i have a man which i shall be a man of the comminstance to a some of the man which i could he said to the house of the commins and the man of street in the country and a sound and the c

RNN Applications

A common application of Recurrent Neural Networks is machine translation.

For example, a neural network may take an input sentence in Spanish and translate it into a sentence in English.

The network determines the likelihood of each word in the output sentence based upon the word itself, and the previous output sequence.

Next: In the next tutorial, we will look at the perceptron and its implementation using TensorFlow. We will also train a multi-layer perceptron model to recognize images of digits.